It’s always bothered me that LLM benchmarks seem to have leaked all over the internet. We all pretend it’s fine, but they’re obviously going to end up in the training data and become useless[1]. So when I recently saw LLMs struggle with a task that isn’t covered by any of the major benchmarks, I jumped on the opportunity to build a small task-specific benchmark.

I was testing a travel-agent demo and noticed the LLM struggling to translate phrases like “I’d like to leave for SF tomorrow and stay for a week” into concrete dates for booking a flight. I figured I could generate a few hundred date ranges like that using our evaluation tools[2], so I threw them at PaLM 2, GPT-4, and GPT-4 Turbo.

Each question looks something like this:

Today’s date is November 9, 2023.

I’d like to start my trip tomorrow, and stay for a week.

The LLM is prompted to extract the departure and return dates, which I then compare against the correct answer saved when the question was generated. I use slightly different prompting and output formatting for PaLM and for the OpenAI models, to eke out the best performance from each. Every model sees the same set of 200 questions.
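The post doesn’t show the grading code. A minimal sketch of this kind of exact-match grading might look like the following, assuming the model is asked to finish with a range in `MM/DD/YYYY-MM/DD/YYYY` form (the format that appears in GPT-4 Turbo’s responses). The helpers `parse_range`, `grade`, and `accuracy` are hypothetical names for illustration.

```python
from __future__ import annotations

import re
from datetime import date, datetime

# Assumed output format: "MM/DD/YYYY-MM/DD/YYYY", e.g. "05/22/2023-06/12/2023".
RANGE_RE = re.compile(r"(\d{2}/\d{2}/\d{4})\s*-\s*(\d{2}/\d{2}/\d{4})")
DATE_FMT = "%m/%d/%Y"


def parse_range(response: str) -> tuple[date, date] | None:
    """Return the last date range in a model response, or None if absent or invalid."""
    matches = RANGE_RE.findall(response)
    if not matches:
        return None
    start, end = matches[-1]  # with step-by-step output, the answer comes last
    try:
        return (datetime.strptime(start, DATE_FMT).date(),
                datetime.strptime(end, DATE_FMT).date())
    except ValueError:  # e.g. an impossible date like "02/30/2023"
        return None


def grade(response: str, expected: tuple[date, date]) -> bool:
    """Exact match: both the departure and the return date must be right."""
    return parse_range(response) == expected


def accuracy(results: list[bool]) -> float:
    """Fraction of questions answered correctly."""
    return sum(results) / len(results)
```

Taking the last match means the same grader works whether or not the model writes out intermediate dates before its final answer.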

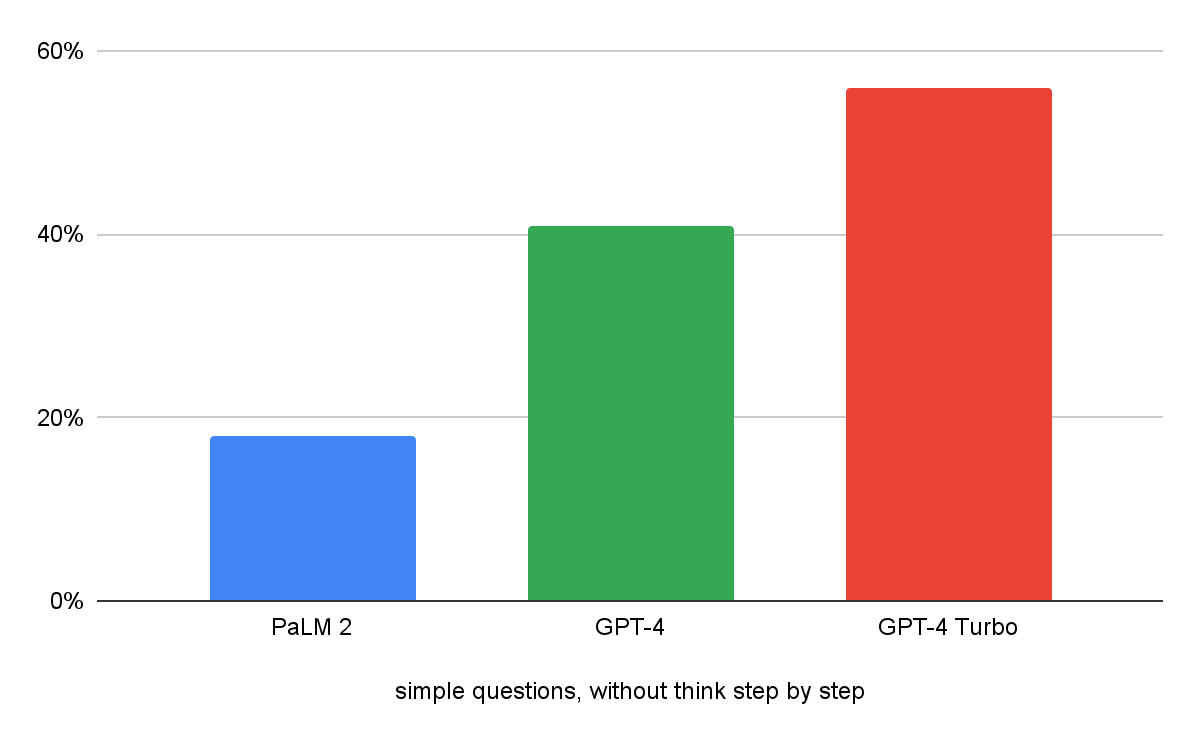

Here’s how they did:

All three models struggle here. GPT-4 Turbo performs best, answering 56% of the questions correctly. The original GPT-4 manages only 41%, but that is still more than twice as many correct answers as PaLM 2, which scores 18%.

Looking at the raw output, one thing jumped out immediately: GPT-4 Turbo uses chain-of-thought (“think step by step”) reasoning by default. For example:

Question: Today is 04/17/2023. Leave five weeks from today and stay for three weeks.

Response: To calculate the start date, add five weeks to today's date (04/17/2023). There are 7 days in a week, so five weeks is 5 * 7 = 35 days.

Start Date Calculation:

04/17/2023 + 35 days = (04/17/2023 + 35 days)

Since April has 30 days, adding 35 days to April 17th would spill over into May.

The remaining days after April is 35 - (30 - 17) = 22 days.

So, the start date is May 22nd, 2023.

To calculate the end date, add three weeks to the start date (05/22/2023). Three weeks is 3 * 7 = 21 days.

End Date Calculation:

05/22/2023 + 21 days = (05/22/2023 + 21 days)

May has 31 days, so adding 21 days to May 22nd is straightforward and would result in June:

Remaining Days in May: 31 - 22 = 9 days.

9 + 21 = 30, so we go to the next month, which is June.

The end date is June 12th, 2023.

Formatted date range: 05/22/2023-06/12/2023.
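As a quick sanity check (not part of the original experiment), Python’s standard `datetime` module reproduces the model’s final answer for this question:

```python
from datetime import date, timedelta

today = date(2023, 4, 17)
start = today + timedelta(weeks=5)  # "Leave five weeks from today" (35 days)
end = start + timedelta(weeks=3)    # "stay for three weeks" (21 days)
print(f"{start:%m/%d/%Y}-{end:%m/%d/%Y}")  # prints 05/22/2023-06/12/2023
```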

The other models just spit out an answer with no reasoning steps. To make the comparison fairer, I added a similar step-by-step prompting technique to all three models to see how they perform. I didn’t try to seriously optimize the prompt; I just did some simple tweaking to get that “think step by step” behavior.
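The exact prompts aren’t given in the post. As a hypothetical illustration, toggling step-by-step reasoning can be as simple as appending one instruction to an otherwise identical prompt. The wording and the `build_prompt` helper below are assumptions, not the prompts used in this experiment.

```python
# Hypothetical wording; the prompts actually used in the experiment are not published.
STEP_BY_STEP_SUFFIX = (
    "Think step by step. Then, on the last line, write the answer as "
    "'Formatted date range: MM/DD/YYYY-MM/DD/YYYY'."
)
DIRECT_SUFFIX = "Answer only with the date range as 'MM/DD/YYYY-MM/DD/YYYY'."


def build_prompt(today: str, request: str, step_by_step: bool) -> str:
    """Assemble a date-extraction prompt, optionally asking for reasoning first."""
    parts = [
        f"Today's date is {today}.",
        request,
        "Extract the departure and return dates.",
        STEP_BY_STEP_SUFFIX if step_by_step else DIRECT_SUFFIX,
    ]
    return "\n\n".join(parts)
```

Keeping everything except the final instruction identical makes it easier to attribute any score change to the step-by-step request itself.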

The key takeaway: step-by-step prompting clearly helps both PaLM 2 and GPT-4, but not enough for PaLM 2 to catch up to even the original GPT-4’s score. Google’s model remains unviable for anything serious; we’ll see if that changes when Google releases Gemini.

It’s also interesting that GPT-4 Turbo gets worse with “think step by step” prompting, despite being asked exactly the same questions[3]. If GPT-4 Turbo consistently does worse with this style of prompting, it could trip up developers who rely on it. If you’ve seen anything similar (or anything that contradicts this), let me know; I’d love to build a coherent picture of what’s happening here.

[1] Arguably this has already happened, but as long as benchmark scores keep going up for new models, no one cares to look into it.

[2] This works because it’s easier to generate these date questions than to answer them. I can pick a start date, add a random number of days or weeks using date math, then turn the result into natural language for the evaluation. I save the original dates to use for grading. If you have a custom use case for this, reach out.
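A minimal sketch of that generate-then-phrase approach, assuming a single hypothetical template modeled on the “Leave five weeks from today and stay for three weeks” example. The real generator and its phrasings aren’t published; `generate_question`, `weeks_phrase`, and `NUMBER_WORDS` are illustrative names, and this version only varies whole weeks.

```python
from __future__ import annotations

import random
from datetime import date, timedelta

# Hypothetical vocabulary for spelling out offsets in natural language.
NUMBER_WORDS = {1: "one", 2: "two", 3: "three", 4: "four", 5: "five", 6: "six"}


def weeks_phrase(n: int) -> str:
    """Spell out a week count, e.g. 1 -> 'one week', 5 -> 'five weeks'."""
    return f"{NUMBER_WORDS[n]} week" + ("s" if n > 1 else "")


def generate_question(rng: random.Random) -> tuple[str, date, date]:
    """Pick a date, apply random offsets with date math, then phrase it in English.

    Returns the question text plus the ground-truth (start, end) used for grading.
    """
    today = date(2023, 1, 1) + timedelta(days=rng.randrange(365))
    lead_weeks = rng.randint(1, 6)  # randint is inclusive on both ends
    stay_weeks = rng.randint(1, 6)
    start = today + timedelta(weeks=lead_weeks)
    end = start + timedelta(weeks=stay_weeks)
    text = (
        f"Today is {today:%m/%d/%Y}. "
        f"Leave {weeks_phrase(lead_weeks)} from today "
        f"and stay for {weeks_phrase(stay_weeks)}."
    )
    return text, start, end
```

Seeding the generator (for example `questions = [generate_question(random.Random(0)) for _ in range(200)]` with a shared `Random` instance) keeps the question set identical across models, which the comparison depends on.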

[3] It may be that OpenAI has set up GPT-4 Turbo with a “thinking” strategy that works well, and my simple one interfered with it. It’s hard to say for sure, but it’s fun to speculate.